Published Games

These games are available for purchase!

$5.99

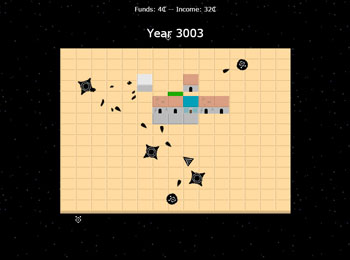

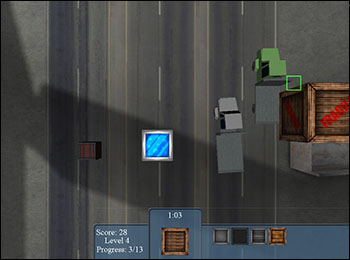

(2019) As the director of your own space shipping company, design and optimize a galactic trade network to efficiently move goods to where they're needed.

Tools

Tools for game development or just entertainment.

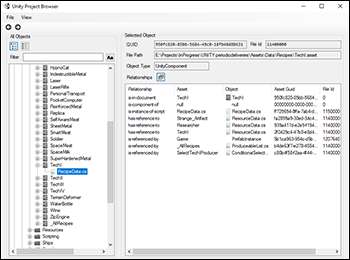

(2018) An application for discovering if and where certain assets are referenced in a Unity project.

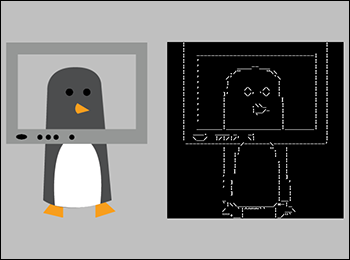

(2018) This web tool converts raster images into ASCII art using an edge-detection algorithm.

(2017) This web tool generates endlessly varied pixel-art sprites from scratch. It currently supports swords and potions.

(2015) This web tool generates several different classes of random names, such as video game names, mineral names, and British town names.

Professional Games

Games I worked on with a large team.

Jam Games

These games were made in an extremely limited time (usually 48 hours).

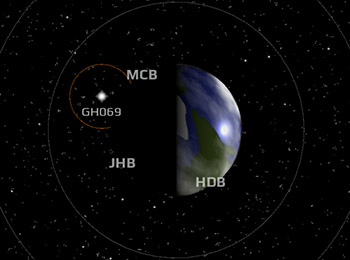

(2023) Direct space traffic in near-Earth orbit, preventing collisions and sending ships where they need to go.

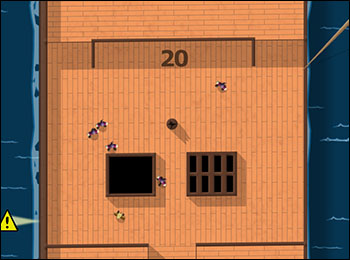

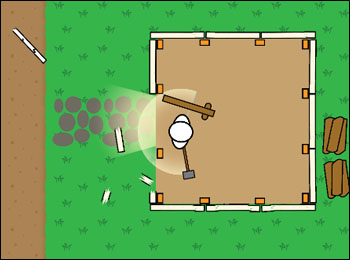

(2020) When the local factory causes sickness to sweep through town, there's only one thing a man called The Wrecker can do.

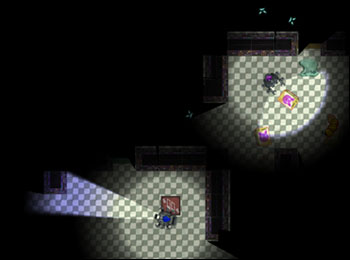

(2017) A two-player local co-op game. Beep and Boop must work together to fill orders in a dark, haunted convenience store.

(2016) An old-school point-and-click adventure. Solve puzzles and escape from the facility you've been imprisoned in.

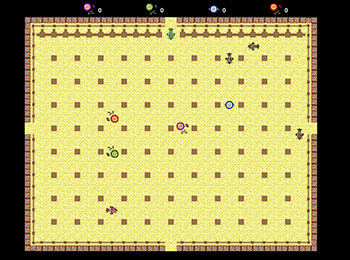

(2014) Establish trade routes between planets and solar systems, manufacture products, and build a shipping empire in your browser.

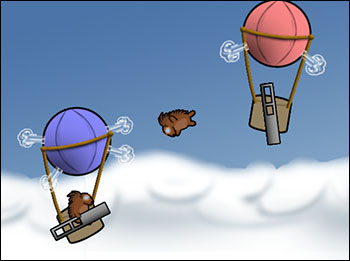

(2013) Play against your friends and see who is the best Helicopter Matador in this humorous, competitive game.

Other Games

(2017) Grow a horde of zombies and fight other players in this always-online race to the top of the leaderboard.

(2014) Explore an online, abstract sandbox world, and build your own structures for others to find.

(2012) A challenging and highly replayable tile-swapping puzzle combining Conway's Game of Life and Rock-Paper-Scissors.

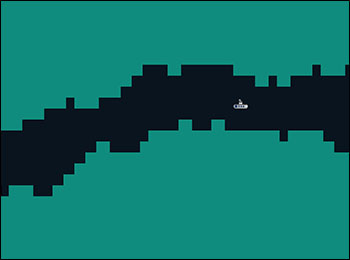

(2011) A clone of the Flash game Helicopter. How far can you fly through a narrow, winding cavern without hitting the walls?

Archived Games

These games are no longer available.

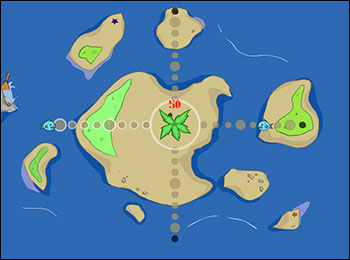

(2012) Defend your island from grooving sea creatures in this rhythm-based arcade game with generative music.